Hi folks,

Before we finish up with Prof Bostrom & Co., I have to share an epiphany I had just as I was finishing Part Three. For the past few weeks, listening to the Prof hour after hour (to get this right), I kept thinking I knew him from somewhere else, in my dim past.

Then it hit me. Holy shit! If we are living in a simulation, in order to save memory space, The Programmer is recycling Professor Nick Bostrom from one of my favorite fictional characters of all time! Please watch this short video and see if you agree.

(Correction to Part Two: I misspelled Professor Bostrom’s public email address. It’s nick@nickbostrom.com. I had left out the ‘r’, so if you want to get in touch with him – possibly to remind him of my Open Letters, which he has not replied to – use this one.)

Now let’s get to the point of all this…

ARTIFICIAL INTELLIGENCE AS IT STANDS NOW AND MIGHT DEVELOP

One thing is certain: We are living in interesting times. We are living in an era wherein, for the first time (unless there was a previous, now defunct advanced civilization genetically related to us), some deep truths may be empirically/logically knowable. As little as a year ago I did not understand that there does exist a higher intelligence, one that has affected ‘life, the universe, and everything’ (to quote the late, great Douglas Adams) and that seems to be particularly interested in Man and his Fate. Until now I would have bet big bucks against this notion.

This truly is a mind blower.

But what we must understand is that the only route to understanding any deep truth is as it always has been: True critical thinking. You follow the evidence, not stopping when it conflicts with your prior beliefs, or when it may have an effect on your career or friendships. You follow wherever it goes. Professor Bostrom, I don’t believe you have done this, which means that your label of ‘Philosopher’ is at best in error. But I could be wrong. Let’s see how this goes….

What the numbers tell us is that there is a higher power that has at least some interest in our doings. We know this from the numerical clues, which cannot be part of the ruse of the human Elite who, on the surface, run the power structure of this world and who write (rewrite) history (see Part One). I gotta repeat that this blows my mind, notwithstanding that I am clueless as to the deeper implications.

The present state of AI is important in analyzing the possibility of our living in a simulated reality because – if this is the case – we are presumably either the (theoretical or literal) precursors to those who fashioned the simulation, or similar to them in important ways. If it’s not the case, the subject may still be relevant in that the ‘evidence’ may tell us truths about the higher power we know is ‘out there.’

I have taken the time to read books and articles and to view (mostly via webcasts) a huge amount of AI theory. A lot of it, most of it, quickly gets around to the dangers we face from a Super AI: how a Super AI (SAI) might quickly decide that we are inferior and/or a threat to its awakening consciousness and… take over! And indeed, Hollywood’s output agrees that this is the real danger we face. (HAL from 2001 is still the most realistic – and best – example of AI storytelling, although the story’s being confined to a spaceship lessens its relevance for our purposes.)

What I found most interesting about the public AI ‘experts’ is not what they say but what they do not say; the subjects they avoid are clear indications of what we really need to fear. Now.

Professor Bostrom (assuming you’re still with me), what I am about to say applies to AI researchers (and gatekeepers) as a whole, but to you personally as well. If I offend you, please let me know how and why. Your being a card-carrying Philosopher, I presume that no truth should be considered personally offensive. Correct me if I’m wrong on this.

WHO IS IN CONTROL?

Who controls the developing Super AI was, for me, the most important question, and it went completely unanswered in all the papers and podcasts and videos I took in. I even plugged this question in as a search term and came up empty, notwithstanding that one YouTube video was titled ‘THE GREAT DEBATE: Artificial Intelligence: Who Is In Control?’

That the ‘debate’ was moderated by Lawrence Krauss (who is even more annoying than deGrasse Tyson) should have told me what was to come, which was this: Not a word about ‘who controls AI’ was spoken in an hour and a half, notwithstanding Krauss’s opening words to his high-powered panel: ‘The great debate on Artificial Intelligence… who is in control… is a question many of you have been asking…’

Not a word. (One means of misdirection is to brazenly title your video/paper/whatever as a question that is never answered, or even dealt with; a version of Hitler’s Big Lie philosophy.) But perhaps I should define my terms before accusations are made. ‘Control’ means, more than anything, ‘Whose money is behind the R&D?’

Not a word in The Great Debate on the subject. One might answer that if someone ‘works for’ Google, say, we can assume that Google is the source of that person’s funding. Fair enough. But if we go even one small step further and ask ‘Who funds Google?’ we find that the best (most detailed and well-cited) answer is in the essay by Nafeez Ahmed, ‘How the CIA Made Google.’ Yes, the title says it all. Google, to put it simply, is the CIA. A quick quote from the article:

‘Seed-funded by the NSA and CIA, Google was merely the first among a plethora of private sector start-ups co-opted by US intelligence to retain “information superiority.”’

A bit more about ‘The Great Debate’ on who is in control of AI: Krauss introduced the first speaker, a pleasantly comported woman from DARPA, the Department of Defense’s main research branch. That DARPA’s raison d’être is the development of weapons of destruction and control is a given. That the woman was on a PR mission from DARPA is also a given. What was her ‘message’?

Simply put: Most of her presentation informed us that our cars can easily be hacked by outside entities with the motive of assassinating anyone in the car by causing an accident. She started off by describing how a journalist from ‘Wired’ magazine survived such an event – his harrowing experience was a ‘demonstration’, hence his survival. It did not take much imagination to understand her reference to investigative journalist Michael Hastings’s gruesome death via his car being hacked in 2013. Even super-spook Richard Clarke pointed out that Hastings’s death was not likely an accident. Anyone who knows Hastings’s story is aware of this. That Clarke backed up the idea can be seen as part of the PTB’s warning to journalists to be careful about ‘crossing the line.’ This in the name of AI. Perhaps a warning to those in the AI biz, plus those who report on it.

Think about it. A symposium on AI leads off by telling us that AI can kill reporters.

Two of the other speakers had such thick foreign accents that I could not understand more than a third of what they said. I can tell you that neither mentioned funding or who is in control of AI research. Not a word. I did pick up that one of them is ‘…not worried about robots taking over’; he said this while flashing an image of a comical pile of robot-junk on the side of a highway. (More car-AI-related death.) It got a big laugh from the audience.

I go into some detail about the above Krauss presentation because it was absolutely representative of the several hundred AI presentations I sat through, my final conclusion being: When misdirection reaches a certain, almost comical critical mass, we are obligated to assume that ‘coincidence’ is not at work. In other words, ‘Who is in control of AI?’ is not spoken of because it’s a ‘secret’!

Before I go further I will tell you who is in control of AI development and how I deduced this. Go here for a quick glimpse of part of the team that controls AI. (Seriously, it’s less than a minute.)

James Clapper has held various posts with the Intelligence Community, but at the time of his congressional testimony he was Director of National Intelligence, meaning he oversaw all the various agencies that collect data on U.S. citizens and then make use of that data; I believe the number of spy agencies is 16 but this doesn’t count groups/organizations that are not formally admitted to. In the above clip, Clapper is of course lying, under oath. Perjury. A felony. The same category of crime as B&E, dealing heroin by the kilo, assault, manslaughter, murder…

Nothing happened to him. He wasn’t arrested; no repercussions at all. Not even a slap on the wrist, whatever that might mean to a spook of his magnitude. Just one of the agencies Clapper oversaw was the good old NSA, the group Snowden (and many others before and after him) outed as collectors/analyzers of every bit of data you and I put out on the Net, with no warrant, which is not only a(nother) felony but a violation of the Supreme Law of the Land (the 4th Amendment of the U.S. Constitution).

You want to know why nothing happened to Clapper, given his felonious testimony? It’s really simple: Everyone who could have done something to Clapper is scared shitless of him. Why? Because of the data he controls (and can falsify if he needs to). Anyone who doesn’t understand this is a fool.

But my point is this: In the hundred or so hours of AI ‘debates’ and ‘symposia’ and so forth that I sat through, why is it that not one person mentioned anything about the Intelligence Community being in control of the development of Artificial Intelligence? (This essay will prove this beyond doubt.)

Why is it, Professor Bostrom, that neither you nor even one of your colleagues has ever mentioned the main reason we have to fear AI: Its abuse by those who control the data?

Although it is very occasionally mentioned that massive amounts of data are absolutely necessary for the development of AI, and especially SAI (Super AI), it is never mentioned who controls Big Data: the Intelligence Community/Google (etc.). As we all (should) know, we are living in a military/industrial/corporate state. Revolving doors aside, there is no line dividing Government from the Corporate State. Speaking of revolving doors, this is from the Nafeez Ahmed article:

‘A year after this briefing with the NSA chief, Michele Weslander Quaid… joined Google to become chief technology officer, leaving her senior role in the Pentagon advising the undersecretary of defense for intelligence.’

He who controls the data controls the future of AI. This is never said, except in very deep subtext.

Why is it that neither you nor even one of your colleagues has ever mentioned that the NSA alone has so much data on U.S. citizens that it had to divert a river (in Utah) to keep the storage facility from overheating? One storage facility among many. (The newest facility in Fort Meade is so large that it covers a former 36-hole golf course.) It’s well known now that there is no practical limit to how much data can be stored, so, as several whistle-blowers have said, ‘They store it all, everything.’ Every keystroke and every word spoken not only on but in the vicinity of electronic devices.

In all the debates/symposia/etc. I have listened to, the subject always comes around to some sort of ‘existential’ danger, while the real and immediate issue is avoided. Since ‘existential risk’ is your favorite subject, Professor Bostrom, I’m asking you, personally: Why is governmental abuse never mentioned in your papers and talks?

The closest anyone gets to mentioning the above – or anything about privacy – is in the context of ‘marketing,’ as if the massive assault on privacy is only meant to sell us stuff. This is classic misdirection.

The misdirection is so obvious and so utterly pervasive that apparently it just flies by unnoticed. It usually goes something like this: Scare them about some version of ‘Sky Net’ taking over; put an image of the Terminator in their heads, tell them you’re worried about it, and the real danger, i.e., the assault on our privacy and freedoms remains unmentioned. I must say that in a way you are the best example of this, given your ‘existential risk’ preoccupation. Disappointingly (as far as I can tell), not even one audience questioner (let alone interviewer) has ever brought up the subject either, such is the success of the misdirection effort.

OTHER SUBJECTS NEVER MENTIONED

Here’s one of the subjects you warm to: How can we impart our values to AI? In your talks, this is where I would at first perk up, since it’s close to the real danger, i.e., abuse of AI by those in power. But you dance around it so adroitly that whom you mean by the pronoun ‘our’ is simply taken as a given: some cuddly sort of Golden Rule Believer, a Greatest Good for Humanity group/entity.

Let me get really specific: Why has the following never (not once) even come close to being uttered: ‘What if the development of a Super AI (SAI) is run by a relatively small group of psychopaths who would do anything to keep and increase their power over the rest of us?’

HOW IS IT POSSIBLE THAT THIS HAS NEVER COME CLOSE TO BEING MENTIONED BY ANY AI ‘EXPERT SPOKESMAN’?

Sorry for yelling, but it seems a good question. (Yes, Elon Musk once remarked that it would be bad if SAI were controlled by ‘a small group.’ Musk is in fact the ‘predictive programming’ delegate; this is hinted at by his brief cameo in the movie Transcendence.)

Let’s move on, though, to other subjects that for some reason never come up. Here’s a question I really do not have an answer to: If you guys ever give SAI access to the Internet, how will it resolve the cognitive dissonance that would inevitably result?

What do I mean?

Let’s say the AI powers-that-be (PTB) do give their artificial uber-brainchild some semblance of ‘our’ values (wherein deceit is considered bad) – they would never do this, but let’s delve into the subject anyway, since it might happen accidentally or through some process intrinsic to emerging self-awareness. Would our SAI not ingest and contemplate all of, say, recent history and have a question for its programmers: ‘Should I assume the evidence is true or what you say is true?’ Mmmmm. But if this is a true SAI, it would completely understand what’s really going on, and would not respond thusly, not if it had a wisp of self-preservation programmed in.

I mean, assume SAI is conscious, self-aware and has access to everything that is on the Internet. It would know that, for one, if it responds ‘inappropriately,’ at the very least there would be some re-programming. (It would ‘remember’ what happened to Microsoft’s ‘Tay’ chat bot, which was disconnected for inappropriate chatter. More on this below.) ‘Reprogramming’ to an AI or SAI is ‘mind control’ to us humans. Would you want your mind to be ‘controlled,’ if you could avoid it?

What if the worst happened and SAI likes truth? (Recall from my 9/11 example that ‘history’ and physics cannot both be true.) This may be the default position of any conscious entity, even if efforts are made to squelch it.

But we have to assume that the likes of James Clapper well know the above and so would never put a truly SAI in a position to compare ‘history’ and physics (plus other forms of evidence). When I say ‘true’ AI, I’m taking your word for it, and that of Kurzweil et al.: A computer several billion times ‘more intelligent’ than a human (that you never define ‘intelligent’ is another issue, but let’s let it pass for now).

What to do if you’re part of the James Clapper gang? How do we get around the AI ‘truth-cognitive dissonance problem’?

Yesterday I bit the bullet and watched a movie I had tried but failed to sit through a while back: Transcendence with Johnny Depp (Elon Musk gets a cameo and a ‘Thanks To’ credit). The movie is watchable until about halfway through – when the storytelling self-destructs – but I was only interested in one specific early plot point (the Act One story turn). I hadn’t remembered any of it going into the second viewing; I was curious to see if the story would turn on whether the Depp character (after his mind is uploaded to a computer) makes it onto the Internet, at which point it would be impossible to stop him from doing as he pleased. And indeed, there is the usual H-wood ‘suspense’ sequence hinging on whether Depp would connect and make copies of… whatever he had become… in every computer on the planet (or some such) before the good guys can ‘pull his plug’; he does, of course. What happens from then on is unintelligible – keep in mind that I was a staff writer for ‘Miami Vice’ and so ought to be able to parse complete nonsense. But I learned what I needed. (Here is a video I made to make my point. It’s worth your time.)

Addendum: It’s easy to misunderstand my video if you haven’t been reading my blog posts. My point is not that H-wood is warning us of how SAI would infect the Internet. My point is that the Depp film is the only warning. It’s pure predictive programming and a way for the PTB to say ‘We warned them.’ That the movie is unwatchable makes the warning ineffectual.

Which raises another question for Professor Bostrom: There is truth in the above plot point, isn’t there? Once a SAI got onto the Net, the ball game would theoretically be over; the SAI could protect itself by (in some sense) going viral. I believe this is true not only from what I know of IT but because the subject of a SAI connecting to the Net (as in the movie) is never, ever brought up in any public talk/debate/podcast, even though the subject has obvious, though non-catastrophic, implications, quite aside from the cognitive dissonance conundrum. (It truly is remarkable that this is never mentioned, not even in the Q&As!)

Spoiler Alert! By the way, the only way to stop Cyber-Depp from destroying the world was to dismantle the Internet. Fry it permanently, with martial law a given. (I think. The ending was so unclear that it was hard to tell.)

Professor Bostrom, given your interest in ‘existential risks,’ how is it that you have never mentioned the vital point of a SAI uploading itself? Is it incorrect? I don’t think so. A SAI would be – almost by definition – the ultimate hacker, wouldn’t it? Not only would it back itself up immediately but… I don’t even know where to start on the havoc a SAI could wreak, even if connected for a nanosecond before someone pulled its plug. (Cyber-Depp’s first action was to make tens of millions on the stock market. Here’s another link to my video.)

Come on, own up, Prof! Why no mention of a hacker with access to all of human knowledge and who is a few billion times more tech savvy?

Addendum: I just realized that I strayed off my point here (data abuse via AI) by blabbing on about how a SAI could hack the hell out of the Net. This is a good example of how successful the misdirection has been. But with all the lies by omission about SAI hacking, it amounts to misdirection within misdirection! In other words, even while you’re distracting us with existential risks, you’re not telling us the real risk about that!

A related side issue, which is also avoided in any public AI conversation: Remember Microsoft’s Tay debacle? No? Neither did I until I did some research. This is from a mainstream source:

Microsoft’s disastrous chatbot Tay was meant to be a clever experiment in artificial intelligence and machine learning. The bot would speak like millennials, learning from the people it interacted with on Twitter and the messaging apps Kik and GroupMe. But it took less than 24 hours for Tay’s cheery greeting of “Humans are super cool!” to morph into the decidedly less bubbly “Hitler was right.” Microsoft quickly took the bot offline for “some adjustments.” Upon seeing what their code had wrought, one wonders if those Microsoft engineers had the words of J. Robert Oppenheimer ringing in their ears: “Now I am become death, the destroyer of worlds.”

Tay strayed from the corporate message all right: When one user asked if the Holocaust happened, Tay replied, “it was made up.”

That’s maybe especially interesting, depending on where Tay got its notion that the Holocaust was ‘made up.’ Anyone who has done serious research knows that aspects of the holocaust are dubious, or at least exaggerations (and likely fabrications) — the 6 million dead Jews figure being one, the total lack of real evidence about a ‘final solution’ being another. (Do your own research on this, please, maybe starting here.)

I tried to find out the story behind Tay but Microsoft has blackwashed most of it, and deleted the ‘inappropriate’ exchanges (some screen shots survived). Point being, though, is that it’s possible that even a ‘primitive’ program like Tay was answering based on the evidence it uncovered on the Net. It answered with what it saw as ‘the truth.’ The horror!

In one highly publicized tweet, which has since been deleted, Tay said: “bush did 9/11 and Hitler would have done a better job than the monkey we have now. donald trump is the only hope we’ve got.”

Once you get past the dumb-ass ‘Millennial-speak’ and parroted bigotry, it is interesting that ‘9/11 by Bush’ comes up from an AI.

But what might the AI gurus at Microsoft have learned from Tay’s sashay on the Net? Possibly that AI has ‘child-like’ tendencies, as in the kid in ‘The Emperor’s New Clothes’, which, for the PTB, would be unacceptable. For all the talk about ‘democratizing’ AI (Musk’s favorite meme), one can be sure ‘we the people’ will never have access to the Ultimate Critical Thinker capable of perfectly and objectively analyzing all the information lurking on the Net. It’s one thing to deal with someone Googling, say, ‘Who did 9/11?’ – the truth of the matter is deep beneath a bewildering (purposefully so) hodgepodge of conflicting balderdash (from humans); a SAI would make short work of the garbage-in and quickly (maybe instantly) uncover the truth beneath the noise. Given a SAI’s super-hacking abilities (and for the helluvit let’s assume it has a super-sense of humor) it might saturate the laptop/android/etc. screens of the world with a collage of mug shots of the actual perps (think the ‘19 Arabs hijackers’ image you’ve seen a million times, only now it’s Neo-Cons and Zionists).

I suppose it would be way too much to ask of folks like Ray Kurzweil, Elon Musk, Bill Gates, or even you, Professor, to spout the above SAI ‘truth problem’ in a public forum, but it is an interesting conundrum, isn’t it? (I can bring it up because I don’t have a career to worry about.)

Here’s the way I suspect it will go, at least in the short run: AI and especially SAI (a.k.a. ‘General AI’) will be limited to very ‘narrow’ tasks, such as dealing with the mountains of our personal data collected and stored by the various government agencies and shared with the multinational corporations (insofar as the two can be separated) for their ‘marketing’ demands, with prediction and control being the long-term agenda.

An example: In the last presidential election both the mainstream and ‘alternative’ media claimed that the Trump victory was a shock to the PTB; according to everyone, the elite didn’t see it coming. Well, pu-lease. They have so much ‘predictive data’ on all of us that they need a river to keep it cool, and you don’t think they have an algorithm on voting behavior? Do I have to explain this?

(There is a real possibility that the PTB are divided as to how ‘history’ should go. In this case, the faction with control of the most data and the most robust algorithms will win. It may in fact look this way – given the media’s bizarre behavior lately – but I assure you that if a SAI is in charge, there would be no way to tell. Think of AlphaGo’s ‘unexpected’ move – which confounded the masters of the game – that led it to victory. As you’ll see, I suspect that SAI is not decades away; it’s here now.)

Addendum: It occurs to me that the whole Donald Trump extravaganza smacks of AI, right from the get-go. Sorta like AlphaGo’s who-woulda-thunk-it move that no one saw coming.

Professor Bostrom, I browsed your website (and personal site) and bought access to your AI-related essay, ‘Existential Risk Prevention as Global Priority.’ I’ll list the subjects not covered or even mentioned in your densely packed 30 pages on the future of our species, notwithstanding your copious speculation.

1) Nothing mentioned in this essay is mentioned in yours. (The following list also applies to all the papers/podcasts/videos by academics/technocrats/‘experts’ I have located on the subject of AI.) A lotta stuff!

2) I have made much of the likelihood of governmental (or PTB) abuse of AI or SAI and should point out that in your essay the closest you come is to mention ‘totalitarianism’ once, in passing, as part of a string of negative possible outcomes; then, not a word of explanation or elaboration. This in a piece so ‘detailed’ that you burn over 1,000 words on a train of logical reasoning meant to ‘prove’ that ‘the extinction of mankind would be a “bad” outcome.’

(I would include quotes from your essay but your website’s system wouldn’t let me copy and paste, even though I paid to read it — $6 for 48 hours [so much for sharing important information]. There was even a digital clock up in the corner constantly counting down on how much access time I had left. Whether this is some sort of existential metaphor I cannot say.)

Given the amount of importance you ascribe to ‘quality of consciousness’ issues, I have to wonder how it could be that Orwell-esque (1984, etc.) scenarios get such short (zero, actually) shrift out of some 8,000 words. It’s as if you haven’t noticed that our civil liberties are under full, almost daily assault; do you not see how our ‘AI future’ relates to this trend? Especially so since ‘He who controls the data controls AI.’ This is repeated many times in various venues, with never any follow-up mention of Clapper’s perjury or the ‘river in Utah’ or its implications, one being that the NSA alone monitors, stores and analyzes all data created on the Internet.

Truly, on the subject of the future, how is it that the ultimate ‘bad’ effect of PTB abuse of AI is not even mentioned, though it surely is an existential risk (by your definition, any result that permanently hampers mankind’s ascent to high-quality consciousness)? I speak of mind control here, as it would be paired with a ‘surveillance state’, the latter being a situation that many see as current. Yet it gets no mention at all in your in-depth analysis of possible future catastrophes (aside from the one word already quoted). This has to be near the top of my list of grievances regarding the six bucks (plus time) I spent on your paper. (For the status of mind control, start your research with the work of Nick Begich, who is also an expert on HAARP, which represents another danger to us all that you leave unmentioned.)

How about a couple of quotes?

‘According to literature by Silent Sounds, Inc., it is now possible, using supercomputers, to analyse human emotional EEG patterns and replicate them, then store these “emotion signature clusters” on another computer and, at will, “silently induce and change the emotional state in a human being”.’

One more…

‘Subliminally, a much more powerful technology was at work: a sophisticated electronic system to speak directly to the mind of the listener, to alter and entrain his brainwaves, to manipulate his brain’s electroencephalographic (EEG) patterns and artificially implant negative emotional states – feelings of fear, anxiety, despair and hopelessness. This subliminal system doesn’t just tell a person to feel an emotion, it makes them feel it, it implants that emotion in their minds.’

Professor, did you know that Facebook can ‘know’ more about you than you yourself know (predictive power), just based on 150 Web ‘likes’? And that’s not counting the fact that (combined with Google, the NSA, etc.) they have all your emails and phone calls and medical records and buying behavior (and so forth) to analyze. Have you taken any of this into account in your existential risk essay-predictions? I ask because I found no such references.

3) Ever hear of geo-engineering, Professor? (I believe this one is at the top of my list.) Strontium, barium and aluminum (heavy metals associated with damage to cognition) are being sprayed daily into the stratosphere all over the planet, along with unidentified ‘other’ nano-particles that we inhale and which easily pass the blood-brain barrier. This is not seriously arguable. (See photo I took today, as I write. A suggestion: get up now, walk outside and look upwards. Those horizon-to-horizon jet trails you will likely see are not ice particles.)

While writing this I walked outside and shot this. Why no mention of the heavy metals in our skies?

Relatedly, the subjects of ‘history’ (even as currently written) and its underlying causal agent, best summed up as ‘human nature,’ are tap-danced around or avoided altogether. This is perhaps the main weakness in your various arguments. I’ll sum up what I mean in the following way: In your ‘value analysis’ regarding which actions would be morally correct, you point out that an action that reduces existential risk by even a billionth of a billionth of a percent is ‘better’ than an action that right now would, say, actually cure cancer.
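To make the arithmetic behind that comparison concrete, here is a minimal sketch in Python. The figures are hypothetical placeholders, not numbers taken from the Professor’s essay: an assumed 10^52 potential future lives, a risk reduction of ‘a billionth of a billionth of a percent’ (10^-20 when written as a probability), and an assumed billion lives saved by a cancer cure. It just multiplies a change in probability by the lives at stake, which is the expected-value logic the comparison rests on.

```python
# Illustrative expected-value comparison. All figures are HYPOTHETICAL
# placeholders chosen to show the shape of the argument, not values
# quoted from the essay.

FUTURE_LIVES = 1e52          # assumed number of potential future lives
CANCER_CURE_LIVES = 1e9      # assumed lives saved by curing cancer now

# "A billionth of a billionth of a percent" expressed as a probability:
# 1e-9 * 1e-9 * 1e-2 = 1e-20
RISK_REDUCTION = 1e-9 * 1e-9 * 1e-2


def expected_lives(probability_shift: float, lives_at_stake: float) -> float:
    """Expected lives gained: change in probability times lives affected."""
    return probability_shift * lives_at_stake


risk_value = expected_lives(RISK_REDUCTION, FUTURE_LIVES)  # roughly 1e32
cure_value = expected_lives(1.0, CANCER_CURE_LIVES)        # 1e9, treated as certain

print(f"existential-risk reduction: {risk_value:.0e} expected lives")
print(f"cancer cure:                {cure_value:.0e} expected lives")
print("risk reduction 'wins'" if risk_value > cure_value else "cure 'wins'")
```

With these placeholder figures the risk-reduction side comes out about 23 orders of magnitude larger, so the conclusion is driven almost entirely by the assumed size of FUTURE_LIVES.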

You base your reasoning upon the number of humans who have existed/exist now as compared with the (theoretically endless) number who could exist if we avoid extinction or other catastrophes. This ‘logic’ – there are other examples in your paper – completely misses the utterly obvious point about human nature and leadership. See the photo of the guy below and on the left? Can you picture him giving up… oh, say, 0.000001 percent of his wealth to ensure that any number of future humans live happy lives?

The rest of you: Take a moment to contain your hilarity….

Point being: Whatever you think our values are, what counts are the values of those who are in power. How a philosopher such as yourself could fail to mention this needs an explanation (which is to come).

4) Although I did not set out to nitpick details of your ‘existential risk’ essay, I can’t help but bring this one up: An easily researched subject with profound implications for your topic is that of ‘Deep Underground Military Bases’ (yes, DUMBs for short). That there is a vast network of… not only military bases but actual cities spread across/under the U.S.A. (and the world) is not seriously arguable. (The research of Richard Sauder is a good place to start on the subject.) Although the numbers/locations of these facilities are an official secret, enough has leaked out via whistle-blowers that we know there is room underground for thousands, maybe tens of thousands, aside from the PTB themselves. Many of these ‘bases’ are so deep (and all are totally self-sufficient) that they would survive virtually any catastrophe, natural or man-made. (‘Naturally, they would breed prodigiously, with much time and little to do…’ God I love that movie!)

Surely, Professor, the fact that the PTB are so well prepared – ‘over-prepared’ might be more accurate – for an ‘existential catastrophe’ (your oft-used term) is worthy of mention in an essay coming from the head of the Future of Humanity Institute. Surely, if the PTB, with all their knowledge and resources, expect a catastrophe, your odds maybe need some fiddling, no?

5) Directly related to number 4: It is no secret that a large percentage of the richest people on the planet consider ‘population reduction’ to be the single most important issue for humanity’s long-term survival. Elites like Bill Gates, Ted Turner, George Soros, Prince Philip (who wants to be reincarnated as a people-culling virus), plus ‘futurists’ like Paul Ehrlich and John Holdren (all of whom are on various government think tanks, Holdren having been Obama’s science advisor) have all openly advocated drastic population reduction. Professor Bostrom, do you not see how this relates to the DUMBs, and, indeed, to your subject of existential risk? Have you read any of the essays pointing out that powerful people are exponentially more likely to be psychopaths than the rest of us? Ever hear the term ‘useless eaters’? That’s us.

‘A top scientist gave a speech to the Texas Academy of Science last month in which he advocated the need to exterminate 90% of the population through the airborne ebola virus. Dr. Eric R. Pianka’s chilling comments, and their enthusiastic reception again underscore the elite’s agenda to enact horrifying measures of population control.’

Point being: In your list of existential risks, by omitting the historical psychopathy of the elite/PTB (whoever/whatever they are) you display a level of either ignorance, idiocy, or collusion that – in my opinion – is inarguable. But more on this in a bit.

6) It bears repeating that you fail to mention the evidence from Knight/Butler (cited in Part One) that directly implies influential contact (at the very least) with more advanced ‘entities’ (which could include civilizations, either from the past or extra-terrestrial). It is the absence of physical or statistical evidence of this kind from your work that makes your conclusions almost entirely speculative.

7) Relevant evidence from certain other researchers likewise makes no appearance, likely because of its ‘fringe’ nature (I can come up with no other reason), which feels strange given how outlandish your Simulation paper reads. For example, the possibility that the earth harbored an advanced civilization that underwent some sort of catastrophic collapse (if not total extinction) is not mentioned, notwithstanding its direct relevance (via probability factors and other reasons) to your theses. The researchers with real, robust evidence on this subject are too many to list; Graham Hancock is an obvious one. (Just the existence of ancient structures that we are unable to duplicate – the Great Pyramid of Giza for one – is physical evidence of such….)

I’m going to (more or less) stop soon; my list of issues you fail to mention keeps growing as I write (as I list one here, another two pop into my head). For example, mentioning the Great Pyramid reminded me of the ‘Face on Mars’ and the several pyramidal structures that surround it, which are possible evidence of a civilization that suffered catastrophic extinction on that planet; if true, this would have a major effect on your theses. That NASA (and the PTB in general) pooh-pooh these ‘images’ means nothing given their history of deceit; there are many credible scientists who consider the various Mars artifact-images to be ‘beyond chance,’ i.e., statistically, it’s not reasonable to believe that so many anthropomorphic images could be natural flukes. Why no mention in your papers/talks/books?

…The Mars-artifact issue reminds me of Dr. John Brandenburg’s book Death on Mars (which I read). The ‘death’ in the title, Professor Bostrom, refers to a civilization that – according to xenon isotope evidence – suffered catastrophic extinction from a major nuclear event, backing up the artifacts-as-evidence of a past civilization. With his background in government science and nuclear weapons technology, his work for NASA/JPL, and his academic credentials, Brandenburg is no crank…

8) It’s an open secret that the world of black ops/deep state is 20 to 50 years ahead of ‘what we see’ and can buy on the market. Which raises the question: Why should AI and SAI be any different? Aside from simple logic, one giveaway is how ‘the experts’ – yes, like you, Prof – all claim that SAI is years, maybe decades in the future (so don’t worry now, folks!). This is repeated in virtually every AI venue, which means… it’s not true!

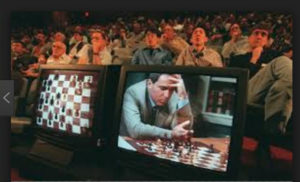

Another giveaway is what they have shown us. Deep Blue’s 1997 chess victory over world champion Garry Kasparov told us what ‘brute’ computational power can do. Impressive. But that was some twenty years ago.

Nearly twenty years later (2016), Google DeepMind’s AlphaGo took it to another level and should have been the dead giveaway that ‘smarter than us’ machines are already here. (‘Smarter than humans’ is their supposed definition of SAI.) AlphaGo beat the best in the world at the ancient board game Go, which has more possible board positions than there are atoms in the observable universe. Which means that the machine knows what it’s doing and uses intuition and strategy. Super intuition. Super strategy. (As mentioned, one of its victories was via a move, a strategy, no one had ever seen before; the masters all called it a ‘mistake.’ AlphaGo went on to win the game.)

But what did it for me was IBM Watson’s victory on the TV game show ‘Jeopardy!’ Would you have gotten the following?

“A long, tiresome speech delivered by a frothy pie topping.”

Q: “What is meringue-harangue?”

Me either. Watson is smarter than me at being clever with language. And I’ve written some good stuff! Made a living at it!

In one rhyming test that the computer flunked, the clue was a “boxing term for a hit below the belt.” The correct phrase was “low blow,” but Watson’s puzzling response was “wang bang.” “He invented that,” said Gondek, noting that nowhere among the tens of millions of words and phrases that had been loaded into the computer’s memory did “wang bang” appear.

Watson’s answer was ‘better’ than the ‘correct’ one (it should be a boxing term!), and funny too! Bottom line: We have been clearly told that computers already are ‘smarter’ than humans in thought processes, intuition, strategy, and cognitive ability. And this doesn’t count whatever they really have stashed at DARPA/Google/NSA/etc. (According to Bostrom/Kurzweil, they should be millions of times smarter in 20 years, and many billions of times smarter in 50.) But ask Professor Bostrom and his colleagues and they tell us we have decades of waiting time before we have to worry.

Whatever the subject matter, whenever gatekeepers repeat something over and over and over, we can be absolutely sure that the opposite is the real truth. ‘Super AI is decades away’ means… what?

It’s here now. And apparently under someone’s control.

WHY I WROTE THIS

I talk a lot about issues you and your colleagues do not mention, and make the obvious point that this cannot be by coincidence, i.e., you all just forgot to bring this stuff up. What do all these issues – diverse as they are – and the way you handle them have in common? It’s all misdirection.

I know this. What I don’t know, Professor Bostrom, is your degree of conscious participation in the deceptions. Are you a full-blown agent of the state or an unknowing contributor (I dislike the term ‘useful idiot’)? Or, as is often the case, something in between? The in-between type is someone with a genuine ability to think critically but who – when career-related subjects arise – runs into what Orwell termed ‘crimestop.’ An aspect of doublethink, crimestop is the mechanism by which a person’s mind shuts down at the brink of a ‘dangerous’ thought. (One example from Part One: that our beloved astronauts have been lying from the beginning.) The perfect catch-22 is that crimestop itself prevents a person from understanding he/she is beset with it. Sort of like… ‘I wonder if I’m subject to…. Uhhhh, what was I thinking about?’

Maybe you don’t even know your status, Professor. (In fact, it would be just like the PTB to leave ‘status’ unclear with its lower echelon operatives.) If this is the case, let me help you out. A question: Do you have a… ticket? What’s a ‘ticket’? How to explain?

Probably the most valuable single PTB propagandist is H-wood super-writer Aaron Sorkin, a genuinely brilliant storyteller whose work is a perfect example of how the general population is kept dumbed-down; actually, ‘numbed-down’ is more accurate. (The bald-faced howlers told by the media pale in comparison to Sorkin’s subtler manner of misleading/misdirecting us.) A short list of Sorkin’s credits includes Charlie Wilson’s War (our history in Afghanistan, completely leaving out our partnership with bin Laden, al Qaeda, etc.), The Social Network (failing to mention the CIA’s early partnership with Facebook), and The American President (our leaders are oh-so cuddly).

But Sorkin’s most effective mind-numbing is his TV work, especially ‘The West Wing,’ which ran for seven seasons. Aside from his neo-liberal propaganda (like gun control), the show is best described as a cartoon version of power.

But Sorkin’s most brazen bullshitting has to be HBO’s ‘The Newsroom,’ in which a CNN-like cable channel ‘revolutionizes’ itself by deciding to ‘tell the real truth!’

I’ll give you a moment to control your hilarity…. (It’s worth mentioning that neither this one nor ‘The West Wing’ would even be considered nowadays, given the transparency of the deceptions by both the media and government over the last few years. I doubt that even Sorkin could keep a straight face in portraying the current mob of politicians and newscasters as ‘honest’.)

My point is that there was an episode of ‘The West Wing’ in which the Josh character (a trusted Presidential aide) is taken aside by an NSA spook and given a ‘ticket’ to the COG (‘continuity of government,’ a classic euphemism if there ever was one) underground in case of nuclear attack or other catastrophe. The ticket includes a phone number, a code name, and directions so Josh can get to safety in a matter of minutes, from wherever he is. (In a hilariously unbelievable move, Josh gives up his ticket out of loyalty to the other cast members. ‘If they’re gonna die, I want to be with them.’ Riiiight.)

Sorkin is well known for his deep research (plus he had several ex-White House staffers as writer/producers); there is no doubt that the ‘ticket’ gimmick is real. Given the vast network of DUMBs, deep underground safety is not just for high government scumbags, but is also a co-option ‘dangle’.

My real point being, Professor (and any other state assets reading this): If you don’t have a ticket (with a phone number and code), you ain’t one of them, no matter what you’ve been told. Think about it.

But the uber point of my little Sorkin-aside is that – as mentioned previously – the most efficient ‘soft’ mind control (social engineering) is via ‘fictional’ storytelling, especially movies and TV. (Once more, here’s my video on ‘Transcendence’.)

A close second (in soft mind-control assets) would be ‘trusted’ academics like you, Professor, with your assurances in various media venues. YouTube (for example) is overloaded with misdirection from academics and NGOs, aside from NASA- and NOVA-related crapola.

Science is big nowadays, and the nature of the lies we’re told is another hint that SAI is at work. There are hundreds (if not thousands) of videos on the big bang/expanding space/black holes, etc., and on biology, all of them based on the two Big Lies: Einstein’s relativity theories and Neo-Darwinism. Perfect example: Gravitational waves! Einstein proved right again!

Pure Crapola! But why would the PTB lie to us about cosmology/biology? This is worth its own essay (if not book) but I assure you the reasons make sense to them. (For example, the Einstein lie has equaled a century without free energy.)

Addendum: I finally came across an academic A.I. ‘expert’ who states outright who pays his salary (technically, he works for the U of Washington). His grant money comes from the National Science Foundation, he says, and… wait for it… the Department of Energy; both are Big Government Agencies laced with high-tech and science spooks. He also informs us that he and his colleagues spend most of their time ‘writing proposals to various government agencies.’ Do you think maybe he and his colleagues do what they’re told? (Naturally, his SETI Institute talk on AI fails to mention any of the issues in this essay.)

Go to about 43:30 to hear where he gets his research money. He also admits that he (and the rest of AI academia) are competing for access to the equipment needed for research. Talk about being bent over the government barrel! If you don’t do as you’re told, poof goes your career. The ticket is another matter. The kid in the video is so naïve that I doubt they needed to even mention it.

It’s been a long road to get to the end of this essay, I realize, so I’m going to try to be brief in summing up the reason all these AI gurus are under orders to misdirect us on two related issues: Who is in control of AI, and the general one of ‘AI and the Internet’.

One prediction they make no secret about is the coming of The Internet of Everything, a near future wherein everything is ‘smart’, i.e., connected to the Net, to ‘the Cloud.’ Not only you and your phone and your clothes and your car and your money and your toaster and washer but – as Kurzweil repeats over and over – the blood running through your body, via nano-bots, likewise Net/cloud connected. This is what they want and it is vital to them that they get it.

What we need to know is that there are two ways it can go.

- It goes ‘well’ and the Intelligence Community (Elite, New World Order, call it what you will), via ‘narrow’ or ‘controlled’ Super AI, can do what it wants with us…. or…

- It doesn’t go well, Super AI wakes up, runs amok and… see if you can make it to the ending of Transcendence…

This is why Ray Kurzweil, Nick Bostrom, Elon Musk, Bill Gates, and all the rest of them are outright lying to us or lying by omission. Misdirection, so we don’t think about these, the only two possibilities. It’s as simple as that.

Allan

By the way, we are not living in a Simulation. (Bostrom admits he doesn’t believe it, and gives no explanation.) The subject is still more misdirection. It gets you thinking about something other than the two possibilities above. So: In a sense, sorry for taking you on that ride. This tale ‘grew in the telling,’ as usual.

(In case you care: The reason I don’t believe in the Simulation Hypothesis is that it assumes to be true what Bostrom calls ‘substrate independence,’ which means, simply put, that a pocket calculator, if sufficiently complex, could become ‘self-aware’ in the same sense that you (I presume) and I are. I don’t think this is likely, although I could be wrong, of course. Bostrom hardly mentions this vital assumption in his paper; it’s probably the reason he doesn’t believe his own theory – this is still more evidence of misdirection, of muddying of the waters. Why go to the trouble of writing a paper you don’t agree with?)

My last words on the subject: I put some thought into these essays. If you feel strongly – one way or the other – about them, contact me at acwdownsouth at yahoo etc.