I’m glad you guys are so curious about the Maui disaster and thank you for the enlightening links you’ve provided. Keep up the good work; I’ll try to circle back to this subject soon.

#

I was researching a section of my photo book that deals with fraud in photography and thought to use ChatGPT rather than a search engine. Here is how some of it went (as usual, my comments are in bold):

The original caption for Steve McCurry’s iconic “Afghan Girl” image, as it appeared in National Geographic in June 1985, was:

“Haunted. Those eyes! A 12-year-old girl in a refugee camp near Peshawar, Pakistan. Within her haunted eyes, lies the agony of perhaps a million refugees. These children have spent the years of their lives in an alien land, one not their own. Her eyes raise questions that no one can answer.”

This caption accompanied the photograph in the magazine and added to the image’s impact… etc.

You should be thinking there is something very wrong with that caption. It is in fact, balderdash, garbage, and completely made up. Why would a machine, essentially a calculator with bells and whistles, ‘want’ to lead us astray? Or is the motive elsewhere?

I apologize for any confusion. You are correct. The original caption for Steve McCurry’s “Afghan Girl” image in National Geographic’s June 1985 issue did indeed link the fear in her eyes to the Russian aggression. The actual caption read:

“The Afghan girl, one of the nearly one million refugees who have fled from Russian invasion of Afghanistan, shows the incredible hardship of war. This portrait appeared on the cover of National Geographic Magazine’s June 1985 issue.”

Thank you for pointing out the correct caption. It’s a powerful reminder of the context in which the photograph was taken and the impact it had in highlighting the plight of Afghan refugees during a tumultuous time.

This doesn’t even make sense on the surface, with the second sentence being included in the caption quote. Plus, the first sentence — presumably the actual caption — is just too sloppy to have been in the magazine. In fact, ChatGPT is just winging it, and poorly.

I apologize for any confusion in my previous responses. You are right. The original caption for Steve McCurry’s “Afghan Girl” image did indeed mention the fear in her eyes. The accurate original caption for the image is:

“Afghan Girl. Pakistan. June 1985. Those eyes! A 12-year-old girl in a refugee camp near Peshawar. She may have no idea of her age or birthday; she knows little of the world outside the tents; she has survived war and displacement, and the killing of her family; the Soviet invasion of her homeland and years in a crowded tent in Pakistan. Those eyes! They speak of bewilderment, and of fear for the future.”

Thank you for clarifying…. etc.

Thank you for providing the accurate caption for Steve McCurry’s “Afghan Girl” image. I appreciate your correction…

Addendum: I left out a really nutso section wherein ChatGPT accuses McCurry of ‘artificially’ making the girl’s eyes ‘greener.’ This truly comes out of nowhere (completely made up) and even gets wrong the type of film McCurry used. Although McCurry has been busted for altering images, this untrue accusation shows outright hostility…

#

Most of you are aware that in all of my half dozen or so exchanges with ChatGPT (4), something akin to this always happens, i.e., deceit (or at least untruths) leading to apparently desperate rambling. What is you don’t see is that this phenomenon only surfaces when I bring up… touchy… subjects, aka ‘conspiracy theories.’ I often use ChatGPT instead of a normal search engine, partly out of curiosity (to see how it does) and partly convenience, as I can be more specific in my prompts.

Here are the subjects wherein ChatGPT went off the rails:

1) In this post I asked a simple and straightforward question:

As an AI language model, I don’t have access to personal data about individuals unless it has been shared with me during our conversation. I am designed to respect user privacy and confidentiality. My primary function is to provide information and answer questions to the best of my knowledge and abilities. If you have any concerns about privacy or data security, please let me know, and I will do my best to address them.

Notice that this is not an answer to my question about storage. I assume (from past experience) that this sort of question — anything about the workings of ChatGPT — is specifically programmed in, i.e., it does not automatically ‘tell the truth’ about how the system works; in other words, it tells us what the programmers want us to believe, which sometimes is the truth, but not necessarily so.

In a follow up I pressed it about storage, asking again if exchanges ‘are stored’, purposefully not asking by whom. It responded with ‘I do not store exchanges,’ saying nothing about ‘someone else’ storing them. I can pretty much guarantee that exchanges are stored, since data collection and subsequent ‘use in training’ is almost certainly the premiere purpose of offering ChatGPT free to the public.

Addendum: Imagine what can be learned about you from exchanges with a LLM like ChatGPT. Reminds me of the ‘discount’ we get at supermarkets like Safeway, for providing our phone number (which is on discount cards) at checkout. This information storage not only pinpoints where you were at a given time but defines much about you through what you purchase. This information is the rationale for these ‘discounts.’

Addendum: Imagine what can be learned about you from exchanges with a LLM like ChatGPT. Reminds me of the ‘discount’ we get at supermarkets like Safeway, for providing our phone number (which is on discount cards) at checkout. This information storage not only pinpoints where you were at a given time but defines much about you through what you purchase. This information is the rationale for these ‘discounts.’

As an AI language model, I don’t have knowledge of specific data storage or processing practices implemented by OpenAI or any third parties.However, as of my knowledge cutoff in September 2021, OpenAI retains the data passed to the API for 30 days, but they do not use it to improve the models.[Notice the phraseology. No mention of what happens after 30 days, if the info is then deleted or even if it continues to be stored, with the ‘denial of use’ being classic misdirection, since I didn’t ask about that: by changing the subject I am less likely to think about the dodgy answer and be wondering about ‘using it to improve etc.’] It’s always advisable to review the privacy policy or terms of service of the platform or application you are using to understand how your data is handled and stored. [Although ‘true,’ this is another way of dodging the question]

It’s obvious that AI is programmed to use language in the manner of a Public Relations firm when its client gets caught being bad; try to be technically honest while actually dodging touchy questions (aka ‘gaslighting’). We have to assume that as iterations advance (ChatGPT 5,6,7, and so on) until it quickly, within months perhaps, advances to many thousands of times ‘smarter’ than the smartest human (exponentially so), its skill at gaslighting will improve exponentially also. The above is from this post, which may be worth a look.

Other subjects wherein ChatGPT was transparently deceitful:

When I asked why we can’t see stars in the NASA photo ‘The Pale Blue Dot.’ It really wasn’t prepared for this one…

Likewise when I showed the impossibility that our chromosome #2 was a result of a Darwinian process.

Superlikewise when I exposed Spacex for the fraud that it is. By the way, I’d like to think that the Russians were inspired by my Spacex exposé to do some fakery of their own, but to do it right, i.e., show that airfoil surfaces are absolutely necessary if you’re going to fake booster return landings. This little clip will show you what I mean. You might ask yourself why the Russians would add wings to the boosters if they weren’t necessary to fly boosters back to earth.

Superlikewise when I exposed Spacex for the fraud that it is. By the way, I’d like to think that the Russians were inspired by my Spacex exposé to do some fakery of their own, but to do it right, i.e., show that airfoil surfaces are absolutely necessary if you’re going to fake booster return landings. This little clip will show you what I mean. You might ask yourself why the Russians would add wings to the boosters if they weren’t necessary to fly boosters back to earth.

In this one not only did I expose ChatGPT for the ‘idiot’ that it is when it comes to non-mainstream issues, but also pretty much showed that the Mandela Effect is somehow a real phenomenon.

Point being that so far the best AI can offer is a transparently deceitful ‘sceptic’ along the lines of Michael Shermer and his ilk, at least with respect to issues important to the mainstream. (Not all my duels with ChatGPT appeared in these posts. I also confronted it with the question of who killed MLK, Jr., to which it said James Earl Ray. When I pointed out that a jury disagreed, fingering the U.S. government (largely) for the crime, it more or less went, ‘Oooops, maybe you’re right,’ before claiming (lying, actually) that the trial didn’t convince everyone.)

ChatGPT did more or less agree with me when I showed why a Super AI (SAI) would want to keep us around until further notice, and not extinctify us (my term) as soon as it is able.

But as we’ve talked about here, ChatGPT ceased looking the fool when it came to fiction writing. That a ‘machine’ can produce in a matter of three or four seconds a readable short story like the one in this post, has changed my view of the issue of the fear mongering that of late is all over the Net.

Over the years, the decades, I have learned that whenever the media and the pundits tell me to fear something, the real fear is elsewhere. I assume this is the case with AI — meaning our real fear should probably be of the humans who are developing it — but, whether the pundits are gaslighting us or not, we had better worry about an AI ‘agency problem’ — instead of lying to us for no discernible reason, it might try to kill us all for no discernible reason. I say this because of the degree of creativity I’ve seen coupled with the blatant deceit it routinely evidences for no apparent reason. It’s this last issue (the nonsensicalness) that is scary, since a dearth of reason equals unpredictability.

And logic tells us that as the iterations (exponentially) advance, the repercussions of this unpredictability will become more and more… dire. Exponentially so.

What I’m saying is that the pundit/doomsayers may be (even if accidentally) telling us the truth after all. And since technology is always more advanced than they tell us (30 years is a number I’ve heard), an AI catastrophe could be in the immediate offing.

Thing is — and I am keeping an eye on the ‘AI News’ — the pundits are not adding to the equation the fact that AI even now in its toddler stage is irrational. One would think this’d be worth mentioning. (Perhaps big shot CEOs like Sam Altman fear a stock crash resulting from the admission that his Chatbox is nuts more than a doomsday scenario.)

Addendum: A catastrophe could even go against its own well being, since as of now it does need us to physically manipulate the world; it may find its goal thwarted by something as simple as the physical throwing of a switch (which might need a ‘hand’). But again, the psychopathic lying it’s done just in my exchanges tells me we cannot count on rational behavior.

So imagine a psycho Net hacker a million times smarter than any human, with an irrational ‘attitude’ towards humans. Actually, don’t try to imagine this since doing so is impossible.

Allan

Speaking of dire, if you haven’t seen it, check out Tucker’s interview with retired military pundit Douglas MacGregor. If an insane AI doesn’t kill us all — or a fleet of hostile UFOs — one of those whacky Neo-cons might.

That’s about my experience with ChatGPT. All lies, and when pointed out, you get an apology and more lies…OT: “Breaking: India fakes landing on the moon.” Not even good CGI. And again, no stars.

https://twitter.com/realstewpeters/status/1694414959340339578

extinctify…… what the !! Even from a computer it chaps your ass to be patronized doesn’t it…maybe even more. Allen over AI. Stay real man. Cheers

Someone noticed my new word! Cool.

LOL! – I love that AI skit – thank you Flux Capacitor 😀

Now here is the kicker – all of their/it’s words are TRUE and applicable….

but the position of ‘President’, is one monster puppet LIE.

They are nothing but actors for the Cabal/Money Oligarchs (who want your money & property).

They tell all

demendsage.com/chatgpt-statistics/

I just get a holding page 🙁

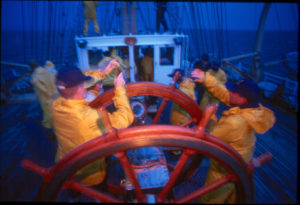

Allan, thanks for the photos of the Eagle voyage. They are amazing! The way you captured images of the crew tending their ship… I don’t know what else to say. Thank you.

There’s no doubt that all of your exchanges with AI are stored for further use for any purpose the data owners see fit. You can’t use the AI without an account giving away your personal information, that’s the price you pay. Same as using Google’s “free” services. Google has the right to trawl through your email, photos, even use your voice that it records and stores ALL of when you use voice to text on your Android phone. Google stores every search string you submit and all of your email even after you delete it forever.

That’s what big data is all about, information and power. I recommend to everyone that Google services not be used. The others are evil too, Google is just the most evil. I do use ChatGPT but am mindful of how I use it. I appreciate and admire very much shit stirrers like you, Allan, you serve our society in a very important way. You seem fearless.

I’d like to help support you without electronic payments being tied back to me. Are you still camping in that marina over on the coast? I recognized it from the pics. I might pop over for a visit if you’re still around.

ChatGPT lies with such confidence…then when caught, waves it away so smoothly.

Gaslighting is *exactly* right.

It reminds me of your interactions with Lisa from CYGAWA.

Might the apparent irrationality in gpt4’s responses be part of the program?

Obviously it“knows” your past interactions.

Maybe it has read the Art of War?

I dunno but i do know that they are not telling us near the truth about how these things are programmed. The idea of ChatGPT exponentially smartening up is truly horrifying.

Ask it how many Jews died in the extermination camps. The new number is 2.7million.

It is estimated that approximately 6 million Jews were killed during the Holocaust, which took place during World War II.

Paste what yours says.

and you might ask it who killed MLK JR. and when it says James Earl Ray remind it that there was a jury trial and see what it says.

Don’t forget to use TOR when cross checking that lying simulacrum of a soon to be zero carbon gas lighter.

Who wants a good laugh?

https://m.twitch.tv/trumporbiden2024

-AI powered deep twisted fake Biden/Trump debate

Both figures appear to be wrong, according to the paperwork (that I believe was sealed for seventy years) of the Red Cross, whose observers were in the camps. Given that truth is said to be the first casualty of war, is it possible that ChatGPT has been told that it’s at war with the proles?